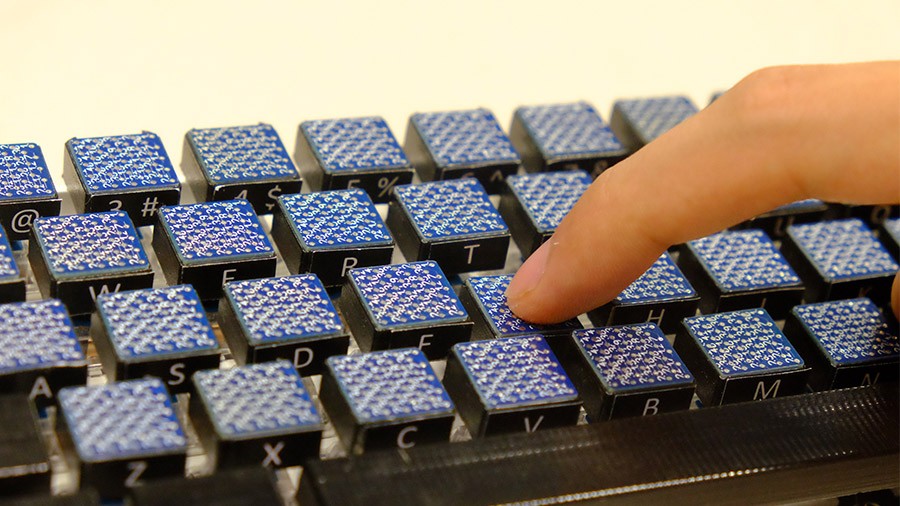

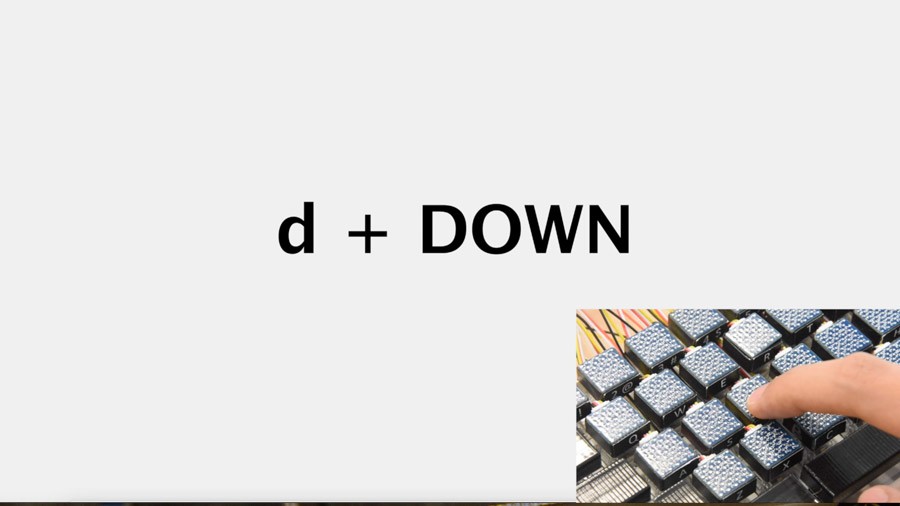

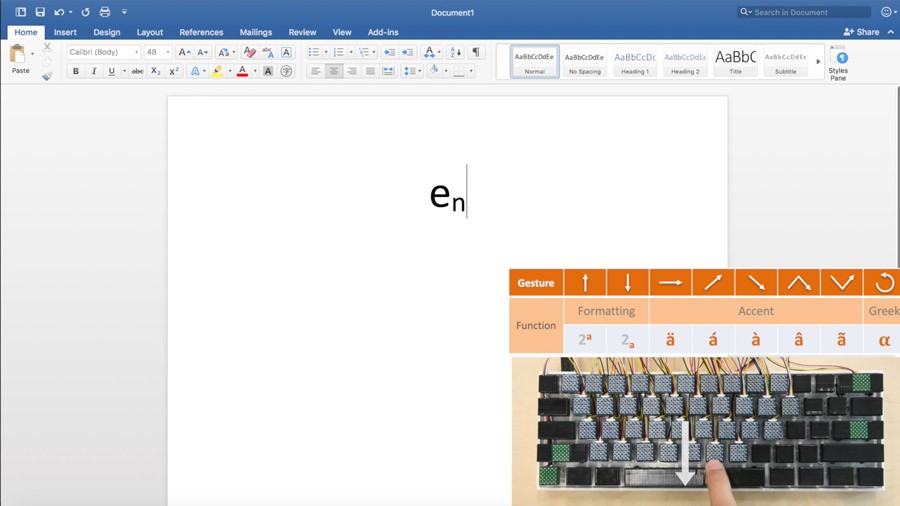

A key on a physical keyboard is a normally open switch and therefore has only two states: ON or OFF. As a result, with single key presses, the input space is limited by the number of keys on the keyboard. Because a user’s fingers inevitably touch the keycaps while pressing keys and interacting with a keyboard, the key concept of GestAKey is to use the keycap surface as a touchpad that enables touch-based interaction. Our work integrates the keystroke with small-area touch gestures, which offers two benefits. First, by placing touch gestures on individual keycaps, we avoid posture switching. Second, we use the press and release events of a key to segment the touch gesture, which eliminates the false-trigger problem.

GestAKey consists of two interacting systems: 1) the hardware that reads touch data from keycaps, and 2) the software that listens for key events, recognizes gestures, and triggers the corresponding output. With GestAKey, users can perform diverse touch-based gestures after pressing a key down and before releasing it. By combining the touch gesture information with the key events, a single keystroke can be assigned various meanings.
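
To make the segmentation idea concrete, the following is a minimal sketch of how the software side might buffer touch samples only between a key's press and release events and classify the gesture on release. It is an illustration under stated assumptions, not the GestAKey implementation: the class `KeyGestureSegmenter`, the function `classify`, the gesture names, the normalized keycap coordinates (0 to 1, with y increasing downward), and the `min_travel` threshold are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]  # assumed normalized keycap coordinates in [0, 1]


def classify(points: List[Point], min_travel: float) -> str:
    """Label a touch trace by its net displacement (hypothetical gesture set)."""
    if len(points) < 2:
        return "tap"
    dx = points[-1][0] - points[0][0]
    dy = points[-1][1] - points[0][1]
    if max(abs(dx), abs(dy)) < min_travel:
        return "tap"
    if abs(dx) >= abs(dy):
        return "swipe_right" if dx > 0 else "swipe_left"
    # Assumption: y grows toward the bottom edge of the keycap.
    return "swipe_down" if dy > 0 else "swipe_up"


@dataclass
class KeyGestureSegmenter:
    """Buffers touch samples only while the corresponding key is held down."""

    min_travel: float = 0.3  # assumed threshold separating taps from swipes
    _active: Dict[str, List[Point]] = field(default_factory=dict)

    def key_down(self, key: str) -> None:
        # The press event opens a gesture segment for this key.
        self._active[key] = []

    def touch(self, key: str, x: float, y: float) -> None:
        # Samples that arrive while the key is not pressed are dropped, so
        # incidental contact with a keycap cannot trigger a gesture.
        if key in self._active:
            self._active[key].append((x, y))

    def key_up(self, key: str) -> Optional[Tuple[str, str]]:
        # The release event closes the segment and yields (key, gesture).
        points = self._active.pop(key, None)
        if points is None:
            return None
        return key, classify(points, self.min_travel)


if __name__ == "__main__":
    seg = KeyGestureSegmenter()
    seg.touch("a", 0.2, 0.5)   # ignored: the key is not pressed
    seg.key_down("a")
    seg.touch("a", 0.1, 0.5)
    seg.touch("a", 0.8, 0.5)
    print(seg.key_up("a"))     # (key, gesture) pair for the held key
```

The resulting `(key, gesture)` pair could then be looked up in an application-defined table to select the output for that keystroke; such a table is left out here because the source does not specify the mappings.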